AI

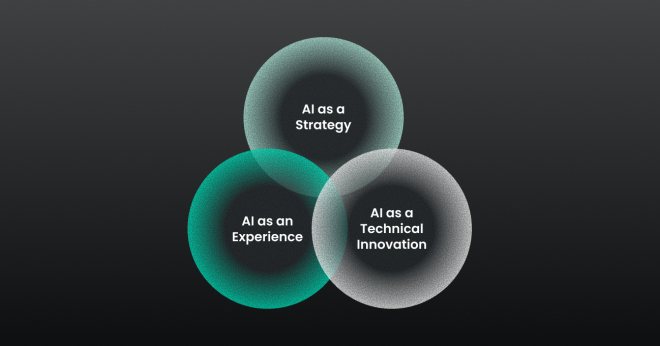

Faster and Smarter: How Appnovation uses AI to Drive Client Results

26 November, 2025|5 min Read

Speed and efficiency matter more than ever in today’s digital world - but not at the expense of accuracy, trust, or accountability. At Appnovation, we help clients keep pace with innovation by using…

584 insights to be viewed

AI

08 December, 2025|5 min

What Is GEO and Why It Matters Now

AI

26 November, 2025|5 min

Faster and Smarter: How Appnovation uses AI to Drive Client Results

Creative & Experience Design

04 November, 2025|4 min

Why Marketers Can’t Ignore AI-Powered UX Design in the Age of Hyper-Personalization

Strategy & Insights

07 October, 2025|3 min

Unlocking Untapped Potential: Entering the World of AI & Data

Data & Analytics

01 October, 2025|3 min

Appno in Action: Transforming Data into a Revenue-Generating Platform for Financial Services

Strategy & Insights

24 September, 2025|3 min

Transforming Pharmaceutical Labeling: Digital ePI with FHIR Standards

Creative & Experience Design

17 September, 2025|3 min

Appno in Action: Elevating UX Through Strategic App Redesign for Pure Group

Strategy & Insights

11 September, 2025|5 min

Your Website Isn’t a Touchpoint — It’s Your Growth Engine

Creative & Experience Design

07 August, 2025|2 min

UX at Scale: Why Engagement Metrics Are the True Measure of Redesign ROI

Managed Services & Support

22 July, 2025|5 min

Your Guide to Understanding Managed Services & Support

Managed Services & Support

15 July, 2025|3 min

How Managed Services Help Our Clients Grow and Stay Ahead

Appnovation Culture

15 July, 2025|4 min